Will ChatGPT Replace Google Search Now?

Hot Topic Decoded

ChatGPT: What Is ChatGPT?

Try — https://chat.openai.com/chat

GPT-3 (Generative Pre-trained Transformer 3) can interpret natural language text and generate natural language in response. It aims for a better understanding of language than the likes of Google or Siri.

This machine learning model has analyzed billions of pages from the internet to build a model of natural language. It can recognize and imitate patterns of language, and that behavior can be customized.

GPT-3 works from a model of language. It can see which words are connected to other words, and in which order they tend to appear, based on the text it has taken in from the internet.

GPT-3 is a large language model built for a wide variety of natural language processing tasks. The model is huge, with 175 billion parameters, and was trained on 570 gigabytes of text. At a high level, the model works to predict the next word in a sentence, similar to the suggestions you see in text messaging or Google Docs. The prediction operates on tokens (words or pieces of words), and repeating it token after token produces not just the next word but entire sentences and articles.
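To make the "predict the next word" idea concrete, here is a minimal sketch of a toy next-word predictor. It is not how GPT-3 works internally (GPT-3 is a transformer neural network); it only counts which word follows which in a tiny made-up corpus. The function names, the whitespace tokenizer, and the corpus are all assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every token, which tokens follow it in the training text."""
    tokens = text.lower().split()  # naive whitespace tokenizer (assumption)
    follower_counts = defaultdict(Counter)
    for current_token, next_token in zip(tokens, tokens[1:]):
        follower_counts[current_token][next_token] += 1
    return follower_counts


def predict_next(model, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    followers = model.get(token.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, start, max_tokens=5):
    """Extend `start` one token at a time by repeatedly predicting the next token."""
    output = [start.lower()]
    for _ in range(max_tokens):
        next_token = predict_next(model, output[-1])
        if next_token is None:
            break
        output.append(next_token)
    return " ".join(output)


# Tiny made-up corpus, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # cat
print(generate(model, "the", max_tokens=3))
```

The `generate` loop is the part worth noticing: a whole sentence comes from nothing more than predicting one token, appending it, and predicting again.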

The model can be fine-tuned, much as we train other neural networks, or it can be used with few-shot learning. Few-shot learning is the process of showing GPT-3 a few examples of a task we want it to accomplish, such as sentiment analysis, together with the correct results, and then running the model on a new example.
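A few-shot prompt for sentiment analysis could be assembled roughly like this. This is a sketch under assumptions: the prompt layout, the helper name `build_few_shot_prompt`, and the example tweets are all hypothetical, and the step of actually sending the prompt to the model is left out.

```python
def build_few_shot_prompt(examples, new_text):
    """Format labeled examples plus one unlabeled text as a single prompt string."""
    lines = ["Classify the sentiment of each tweet as Positive or Negative.", ""]
    for text, label in examples:
        lines.append(f"Tweet: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The new example ends with an open "Sentiment:" for the model to complete.
    lines.append(f"Tweet: {new_text}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Hypothetical labeled examples, made up for illustration.
examples = [
    ("I love the new update, it works great!", "Positive"),
    ("Worst customer service I have ever had.", "Negative"),
    ("This phone is amazing, totally worth it.", "Positive"),
]

prompt = build_few_shot_prompt(examples, "The battery died after one hour.")
print(prompt)
```

The model never gets retrained here. The examples live inside the prompt itself, and the model is expected to continue the pattern by filling in the last label.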

This learning method is incredibly useful and efficient as it allows us to start getting results for our task without long training and optimization. With just a few examples of tweets and their sentiment, we were able to achieve 73% accuracy with few-shot learning!
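For reference, an accuracy figure like this is simply the share of predictions that match the true labels. A minimal sketch, with made-up labels that are not the data behind the 73% result:

```python
def accuracy(predicted_labels, true_labels):
    """Return the fraction of predictions that match the true labels."""
    if len(predicted_labels) != len(true_labels):
        raise ValueError("predictions and labels must have the same length")
    if not true_labels:
        raise ValueError("cannot compute accuracy on an empty evaluation set")
    correct = sum(p == t for p, t in zip(predicted_labels, true_labels))
    return correct / len(true_labels)


# Hypothetical labels for four tweets; not the data behind the 73% figure.
true_labels = ["Positive", "Negative", "Positive", "Negative"]
predicted_labels = ["Positive", "Negative", "Negative", "Negative"]
print(f"{accuracy(predicted_labels, true_labels):.0%}")  # 75%
```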

Example 1) My question is: What is the state of AI in 2035?

Example 2) My question is: GPT-3 AWS

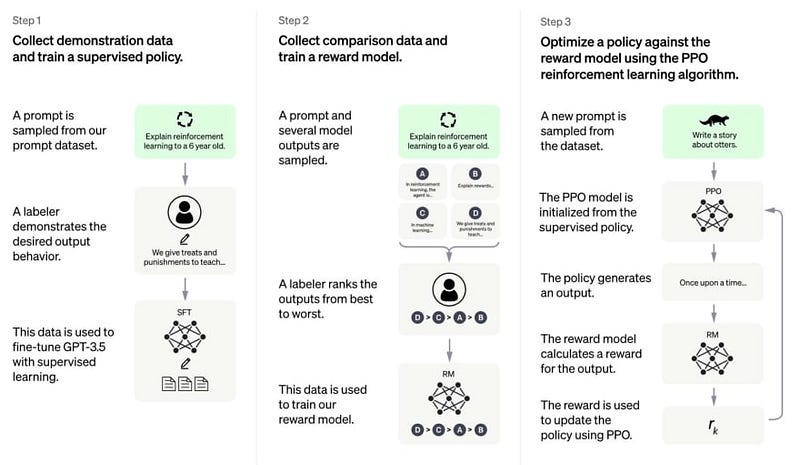

How does it work?

This is the third generation of GPT. The previous generation, GPT-2, has 1.5 billion parameters, but GPT-3 is a beast with 175 billion parameters.
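To get a feel for the jump in scale, here is a quick back-of-the-envelope calculation. It uses the 1.5 billion figure for GPT-2 and the 175 billion figure quoted earlier in this article for GPT-3. The 2 bytes per parameter (16-bit weights) is an assumption for illustration, not a statement about how the model is actually stored.

```python
GPT2_PARAMS = 1.5e9        # GPT-2 parameter count from the article
GPT3_PARAMS = 175e9        # GPT-3 parameter count from the article
BYTES_PER_PARAM_FP16 = 2   # assumption: weights stored as 16-bit floats

# How many times larger GPT-3 is than GPT-2, by parameter count.
ratio = GPT3_PARAMS / GPT2_PARAMS

# Rough size of the weights alone, in gigabytes (1 GB = 1e9 bytes).
weights_gb = GPT3_PARAMS * BYTES_PER_PARAM_FP16 / 1e9

print(f"GPT-3 is about {ratio:.0f}x larger than GPT-2")
print(f"Weights alone at 16-bit precision: about {weights_gb:.0f} GB")
```

Under that assumption the weights alone come to hundreds of gigabytes, which is one reason training and serving a model this size calls for specialized hardware.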

Will it Replace Google Search?

GPT-3 Training on AWS

Amazon EC2 Trn1 instances, powered by [AWS Trainium](https://aws.amazon.com/machine-learning/trainium/) accelerators and paired with [Amazon FSx for Lustre](https://aws.amazon.com/fsx/lustre/) high-performance storage, are purpose built for high-performance deep learning (DL) training while offering up to 50% cost-to-train savings over comparable GPU-based instances. Trn1 instances support up to 800 Gbps of Elastic Fabric Adapter (EFA) networking bandwidth. Each Trn1 instance also supports up to 80 Gbps of Amazon Elastic Block Store (EBS) bandwidth and up to 8 TB of local NVMe solid state drive (SSD) storage for fast workload access to large datasets.

Amazon EC2 Trn1 Instances for High-Performance Model Training are Now Available

Join our Group: https://www.meetup.com/aws-data-user-group-bangalore

Join Cloudnloud Tech Community for Training, Re-Engineering, and career opportunities.

Follow Page 👉 — https://lnkd.in/dJNeuhYA

Follow Group 👉- https://lnkd.in/e4V7bkgP